Will the Polls Be Wrong Again in 2020

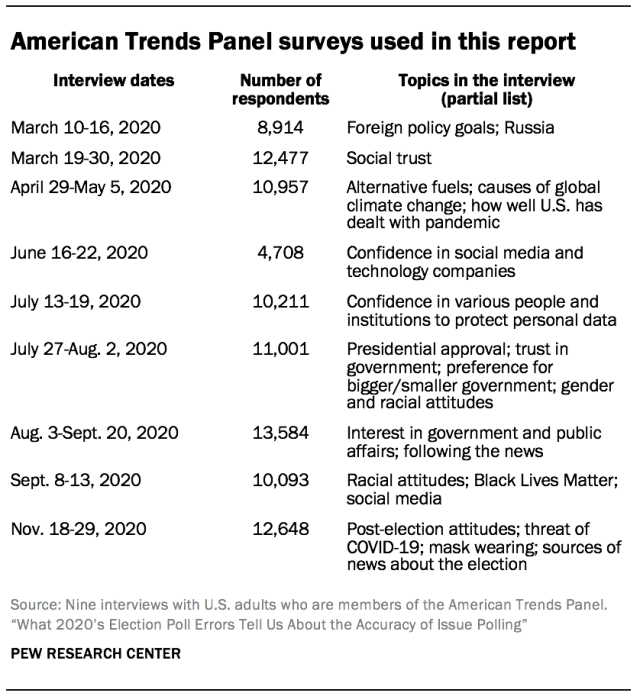

Pew Research Center conducted this study to understand how errors in correctly representing the level of support for Joe Biden and Donald Trump in preelection polling could affect the accuracy of questions in those same polls (or other polls) that measure public opinion on issues. Specifically, if polls about issues are underrepresenting the Republican base the way that many 2020 preelection polls appeared to, how inaccurate would they be on measures of public opinion about issues? We investigated by taking a set of surveys that measured a wide range of issue attitudes and using a statistical procedure known as weighting to have them mirror two different scenarios. One scenario mirrored the true election outcome among voters (a 4.4-point Biden advantage), and another substantially overstated Biden's advantage (a 12-point lead). For this analysis, we used several surveys conducted in 2020 with more than 10,000 members of Pew Research Center's American Trends Panel (ATP), an online survey panel that is recruited through national, random sampling of residential addresses that ensures that nearly all U.S. adults have a chance of selection. Questions in these surveys measured opinions on issues such as health care, the proper scope of government, immigration, race, and the nation's response to the coronavirus pandemic. These opinions were examined to see how they differed between the two scenarios.

Most preelection polls in 2020 overstated Joe Biden's lead over Donald Trump in the national vote for president, and in some states incorrectly indicated that Biden would likely win or that the race would be close when it was not. These problems led some commentators to argue that "polling is irrevocably broken," that pollsters should be ignored, or that "the polling industry is a wreck, and should be blown up."

The true picture of preelection polling's performance is more nuanced than depicted by some of the early broad-brush postmortems, but it is clear that Trump's strength was not fully accounted for in many, if not most, polls. Election polling, however, is just one application of public opinion polling, though obviously a prominent one. Pollsters often point to successes in forecasting elections as a reason to trust polling as a whole. But what is the relevance of election polling's problems in 2020 for the rest of what public opinion polling attempts to do? Given the errors in 2016 and 2020, how much should we trust polls that attempt to measure opinions on issues?

A new Pew Research Center analysis of survey questions from nearly a year's worth of its public opinion polling finds that errors of the magnitude seen in some of the 2020 election polls would alter measures of opinion on issues by an average of less than 1 percentage point. Using the national tally of votes for president as an anchor for what surveys of voters should look like, analysis across 48 issue questions on topics ranging from energy policy to social welfare to trust in the federal government found that the error associated with underrepresenting Trump voters and other Republicans by magnitudes seen in some 2020 election polling varied from less than 0.5 to 3 percentage points, with most estimates changing hardly at all. Errors of this magnitude would not alter any substantive interpretations of where the American public stands on important issues. This does not mean that pollsters should quit striving to have their surveys accurately represent Republican, Democratic and other viewpoints, but it does mean that errors in election polls don't necessarily lead to comparable errors in polling about issues.

How is it possible that underestimating GOP electoral support could have such a small impact on questions about issues?

Why did we choose to test a 12-point Biden lead as the alternative to an accurate poll?

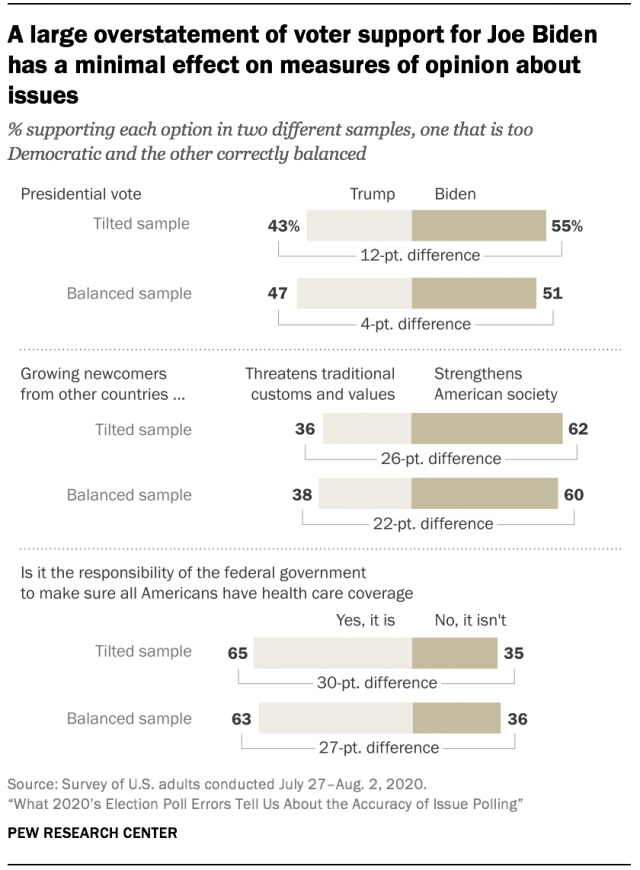

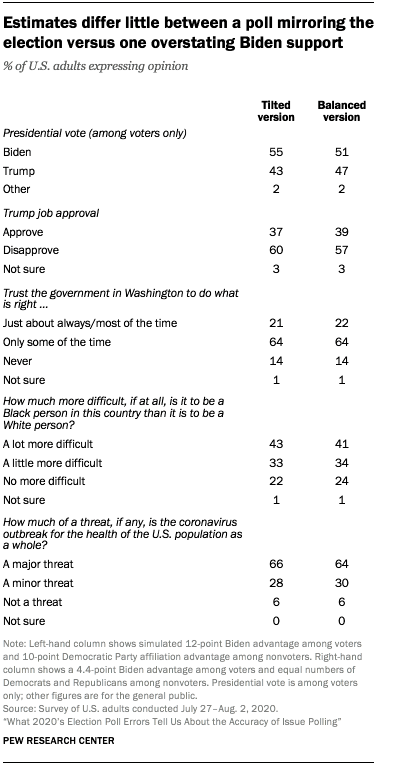

We created a version of our surveys with an overstatement of Biden's advantage in the election (a "tilted version") to compare with a "balanced version" that had the correct Biden advantage of 4.4 percentage points. The 12 percentage point Biden lead used in the "tilted" version of the simulation is arbitrary, but it was chosen because it was the largest lead seen in a national poll released by a major news organization in the two weeks prior to Election Day, as documented by FiveThirtyEight. Several polls had Biden leads that were nearly as large during this time period. The simulation, including the manipulation of party affiliation among nonvoters, is described in greater detail below.

This finding may seem surprising. Wouldn't a poll that forecast something as large as a 12 percentage point Biden victory also mislead on what share of Americans support the Black Lives Matter movement, think that the growing number of immigrants in the U.S. threatens traditional American customs and values, or believe global climate change is mostly caused by human activity?

The accuracy of issue polling could be harmed by the same problems that affected election polling because support for Trump vs. Biden is highly correlated with party affiliation and opinions on many issues. Pew Research Center has documented the steadily increasing alignment of party affiliation with political values and opinions on issues, a type of political polarization. It stands to reason that measures of political values and opinions on issues could be harmed by whatever it is that led measures of candidate preference to be wrong.

But "highly correlated" does not mean "the same as." Even on issues where sizable majorities of Republicans and Democrats (or Trump and Biden supporters) line up on opposite sides, there remains more diversity in opinion among partisans about issues than in candidate preference. In recent elections, about nine-in-ten of those who identify with a political party vote for the presidential candidate of that party, a share that has grown over time. But that high degree of consistency between opinions on issues and candidate preference – or party affiliation – is rare. That fact limits the extent to which errors in estimates of candidate preference can affect the accuracy of issue polling.

Visualizing a closely divided electorate

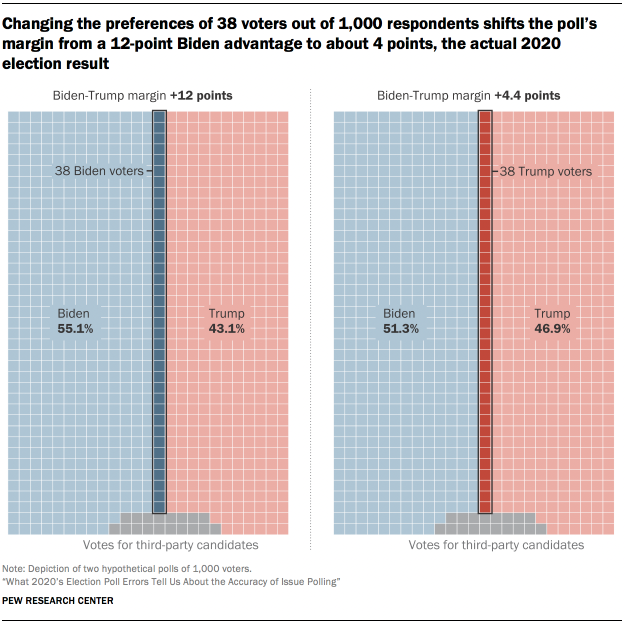

Election polling in closely divided electorates like those in the U.S. right now demands a very high degree of precision. Sizable differences in the margin between the candidates can result from relatively small errors in the composition of the sample. Changing a small share of the sample can make a big difference in the margin between two candidates.

To visualize how few voters need to change to affect the margin between the candidates, consider a hypothetical poll of 1,000 adults. One version shows Biden prevailing over Trump by 12 percentage points (left side of the figure), while the version on the right shows the accurate election results. Biden voters are shown as blue squares and Trump voters as red squares (votes for third-party candidates are shown in gray along the bottom), but the strip in the middle shows the voters who change from the left figure to the right one.

The version on the right shows the actual 2020 election results nationally – a Biden advantage of a little more than 4 percentage points. The poll on the right was created by slightly increasing the representation of Trump voters and decreasing the representation of Biden voters, so that overall, the poll changes from a 12-point Biden advantage to a 4-point Biden advantage. This adjustment, in effect, flips the vote preferences of some of the voters. How many voters must be "changed" to move the margin from 12 points to about 4 points?

The answer is not very many – just 38 of the 1,000, or about 4% of the total. The Biden voters who are replaced by Trump voters are shown as the dark blue vertical strip in the middle of the left-hand panel of the graphic (12-point victory) and dark red in the right panel (more modest 4-point victory).
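The arithmetic behind that figure can be checked with a minimal Python sketch. The function name and its parameters are illustrative, not part of the original analysis; the only inputs taken from the text are the 1,000-person poll, the 12-point starting margin and the 38 flipped respondents.

```python
def margin_after_flips(n_total, biden_margin_pts, n_flipped):
    """Biden's margin (in percentage points) after n_flipped respondents
    switch from Biden to Trump in a poll of n_total respondents."""
    # Each flip removes one Biden vote and adds one Trump vote,
    # so the gap between the candidates closes by two votes per flip.
    shift_pts = 200 * n_flipped / n_total
    return biden_margin_pts - shift_pts


new_margin = margin_after_flips(n_total=1000, biden_margin_pts=12.0, n_flipped=38)
print(f"{new_margin:.1f}")  # 4.4
```

Because every flipped respondent counts twice, moving 3.8% of the sample shifts the margin by 7.6 points.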

In addition to shifting the margin in the race, this change in the sample composition has implications for all the other questions answered by the Trump and Biden voters. The Trump voters, whose numbers have increased statistically, now have a larger voice in questions about immigration, climate change, the appropriate size and scope of the federal government, and everything else in the surveys. The Biden voters have a correspondingly smaller voice.

But as may be apparent by comparing the pictures on the left and right, the two pictures of the electorate are quite similar. They both show that the country is very divided politically. Neither party has a monopoly on the voting public. Yet, while the division is fairly close to equal, it is not completely equal – Republicans do not outnumber Democrats among actual voters in either one. But the margin among voters is small. It is this closeness of the political division of the country, even under the scenarios of a sizable forecast error, that suggests that conclusions about the broad shape of public opinion on issues are not likely to be greatly affected by whether election polls are able to pinpoint the margin between the candidates.

Simulating two versions of political support among the public

To demonstrate the range of possible error in issue polling that could result from errors like those seen in 2020 election polling, we conducted a simulation that produced two versions of several of our opinion surveys from 2020, similar to the manipulation depicted in the hypothetical example shown above. One version included exactly the correct share of Trump vs. Biden voters (a Biden advantage of 4.4 percentage points) – we will call it the "balanced version" – and a second version included too many Biden voters (a Biden advantage of 12 percentage points, which was the largest lead seen in a public poll of a major polling organization's national sample released in the last two weeks of the campaign, as documented by FiveThirtyEight). We'll call it the "tilted version."

But nearly all of Pew Research Center's public opinion polling on issues is conducted among the general public and not just among voters. Nonvoters make up a sizable minority of general public survey samples. In our 2020 post-election survey, nonvoters were 37% of all respondents (8% were noncitizens who are ineligible to vote and the rest were eligible adults who reported not voting). It's entirely possible that the same forces that led polls to underrepresent Trump voters would lead to the underrepresentation of Republicans or conservatives among nonvoters. Thus, we need to produce two versions of the nonvoting public to go along with our two versions of the voters.

Unlike the situation among voters, where we have the national vote margin as a target, we do not have an agreed-upon, objective target for the distribution of partisanship among nonvoters. Instead, for the purposes of demonstrating the sensitivity of opinion measures to changes in the partisan balance of the nonvoter sample, we created a sample with equal numbers of Republicans and Democrats among nonvoters to go with the more accurate election outcome (the Biden 4.4-point margin among voters), and a 10-point Democratic advantage in party affiliation among nonvoters to go with the larger (and inaccurate) 12-point Biden margin among voters. These adjustments, in effect, simulate different samples of the public. In addition to the weighting to generate the candidate preference and party affiliation scenarios, the surveys are weighted to be representative of the U.S. adult population by gender, race, ethnicity, education and many other characteristics. This kind of weighting, which is common practice among polling organizations, helps ensure that the sample matches the population on characteristics that may be related to the opinions people hold.

The simulation takes advantage of the fact that our main source of data on public opinion is the American Trends Panel, a set of more than 10,000 randomly selected U.S. adults who have agreed to take regular online surveys from us. We conducted surveys with these same individuals approximately twice per month in 2020, with questions ranging across politics, religion, news consumption, economic circumstances, technology use, lifestyles and many more topics. For this analysis, we chose a set of 48 survey questions representing a wide range of important topics on nine different surveys conducted during 2020.

After the November election, we asked our panelists if they voted, and if so, for whom. We also collect a measure of party affiliation for all panelists, regardless of their voter status. With this information, we can manipulate the share of Biden vs. Trump voters in each poll, and Democrats vs. Republicans among nonvoters, and look back at their responses to surveys earlier in the year to gauge how our reading of public opinion on issues differs in the two versions.
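One simple way to picture this kind of manipulation is post-stratification on reported vote: every respondent in a group receives the weight that brings the group's share of the sample to a chosen target. The Python sketch below is a simplified illustration under stated assumptions, not the Center's actual weighting procedure, which also adjusts for demographics and for party affiliation among nonvoters. The toy sample counts, the target shares and the function names are all hypothetical; the targets were picked only so that the margins come out to 4.4 and 12 points.

```python
from collections import Counter


def poststratify(groups, target_shares):
    """One weight per respondent so that each group's weighted share
    of the sample equals its target share."""
    counts = Counter(groups)
    n = len(groups)
    return [target_shares[g] * n / counts[g] for g in groups]


def weighted_margin(groups, weights, a="biden", b="trump"):
    """Weighted margin of candidate a over candidate b, in percentage points."""
    total = sum(weights)
    share_a = sum(w for g, w in zip(groups, weights) if g == a) / total
    share_b = sum(w for g, w in zip(groups, weights) if g == b) / total
    return 100 * (share_a - share_b)


# Hypothetical raw sample of 1,000 voters, identified by reported vote.
sample = ["biden"] * 540 + ["trump"] * 440 + ["other"] * 20

# Hypothetical target shares chosen to give 4.4- and 12-point Biden margins.
balanced_targets = {"biden": 0.512, "trump": 0.468, "other": 0.020}
tilted_targets = {"biden": 0.550, "trump": 0.430, "other": 0.020}

w_balanced = poststratify(sample, balanced_targets)
w_tilted = poststratify(sample, tilted_targets)

print(f"{weighted_margin(sample, w_balanced):.1f}")  # 4.4
print(f"{weighted_margin(sample, w_tilted):.1f}")    # 12.0
```

The same two sets of weights can then be applied to any earlier survey answer from the same respondents, which is what makes a side-by-side comparison of the two versions possible.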

Before describing the results in more detail, it's important to be explicit about the assumptions underlying this exercise. We can manipulate the share of voters for each presidential candidate and the share of Democrats and Republicans among nonvoters, but the results may not tell the full story if the Trump and Biden voters in our surveys do not accurately represent their voters in the population. For example, if believers of the internet conspiracy theories known as QAnon are a much higher share of Trump voters in the population than in our panel, that could affect how well our simulation reflects the impact of changing the number of Trump voters. The same is true for our adjustments of the relative shares of Democrats and Republicans. If the partisans in our panel do not accurately reflect the partisans in the general public, we may not capture the full impact of over- or underrepresenting one party or the other.

How much can the balance of these two scenarios affect measures of opinion on issues?

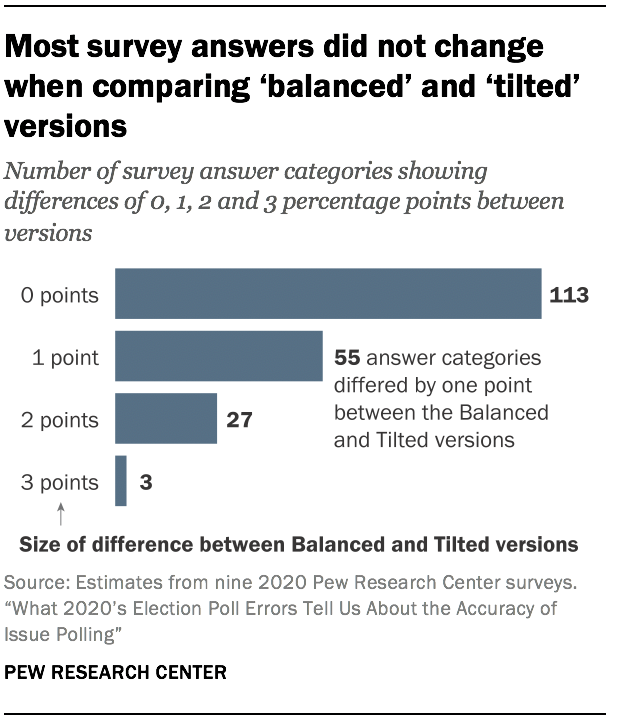

The adjustment from the tilted version (a 12-point Biden advantage with a 10-point Democratic advantage in party affiliation among nonvoters) to the balanced version (a 4.4-point Biden advantage with equal numbers of Democrats and Republicans among nonvoters) makes very little difference in the balance of opinion on issue questions. Across a set of 48 opinion questions and 198 answer categories, most answer categories changed by less than 0.5 percentage points. The average change associated with the adjustment was less than 1 percentage point, and approximately twice that for the margin between alternative answers (e.g., favor minus oppose). The maximum change observed across the 48 questions was 3 points for a particular answer and 5 points for the margin between alternative answers.

One 3-point difference was on presidential job approval, a measure very strongly associated with the vote. In the balanced version, 39% approved of Trump's job performance, while 58% disapproved. In the tilted version, 36% approved of Trump's performance and 60% disapproved. Two other items also showed a 3-point difference on one of the response options. In the balanced version, 54% said that it was a bigger problem for the country that people did not see racism that was occurring, compared with 57% in the tilted version. Similarly, in the balanced version, 38% said that the U.S. had controlled the coronavirus outbreak "as much as it could have," compared with 35% who said this in the tilted version. All other questions tested showed smaller differences.

Opinion questions on issues that have been at the core of partisan divisions in U.S. politics tended to be the only ones that showed any difference between the balanced version and the tilted version. Preference for smaller versus bigger government, a fundamental dividing line between the parties, differed by 2 points between the versions. Perceptions of the impact of immigration on the country, a core issue for Donald Trump, also varied by 2 points between the two versions. The belief that human activity contributes "a great deal" to global climate change was 2 points higher in the tilted version. The share of Americans saying that government should do more to help the needy was 2 points higher in the tilted version than the balanced version.

Despite the fact that news audiences are quite polarized politically, there were typically only small differences between the two versions in how many people have been relying on particular sources for news in the aftermath of the presidential election. The share of people who said that CNN had been a major source of news about the presidential election in the period after Election Day was 2 points higher in the tilted version than the balanced version, while the share who cited Fox News as a major source was 1 point higher in the balanced version than the tilted version.

The complete set of comparisons among the 48 survey questions is shown in the topline at the end of this report.

Why don't big differences in candidate preference and party affiliation result in big differences in opinions on issues?

Opinions on issues and government policies are strongly, but not perfectly, correlated with partisanship and candidate preference. A minority of people who support each candidate do not hold views that are consistent with what their candidate or party favors. Among nonvoters, support among partisans for their party's traditional positions – especially among Republicans – is even weaker. This fact lessens the impact of changing the balance of candidate support and party affiliation in a poll.

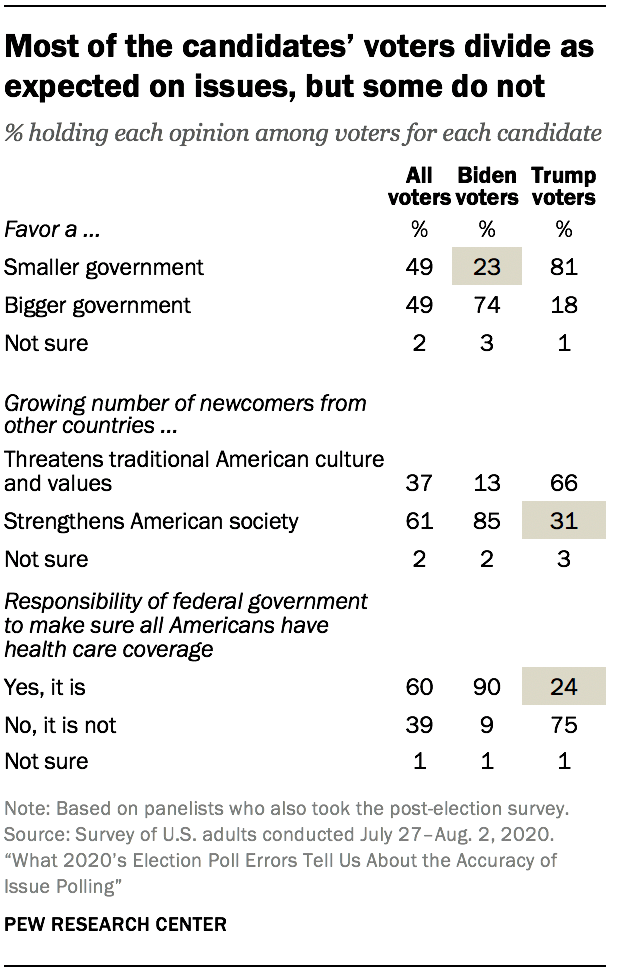

There's almost never a one-to-one correspondence between the share of voters for a candidate and the share of people holding a particular opinion that aligns with the opinion of that candidate's party. Three examples from a summer 2020 survey illustrate the point.

Asked whether they favor a larger government providing more services or a smaller government providing fewer services, nearly one-fourth of Biden's supporters (23%) opted for smaller government, a position not usually associated with Democrats or Democratic candidates. On a question about whether the growing number of newcomers from other countries threatens American values or strengthens its society, about one-third of Trump's supporters (31%) take the pro-immigrant view, despite the fact that the Trump administration took a number of steps to limit both legal and illegal immigration. And about one-fourth of Trump's supporters (24%) say that it is the responsibility of the federal government to make sure all Americans have health care coverage, hardly a standard Republican Party position.

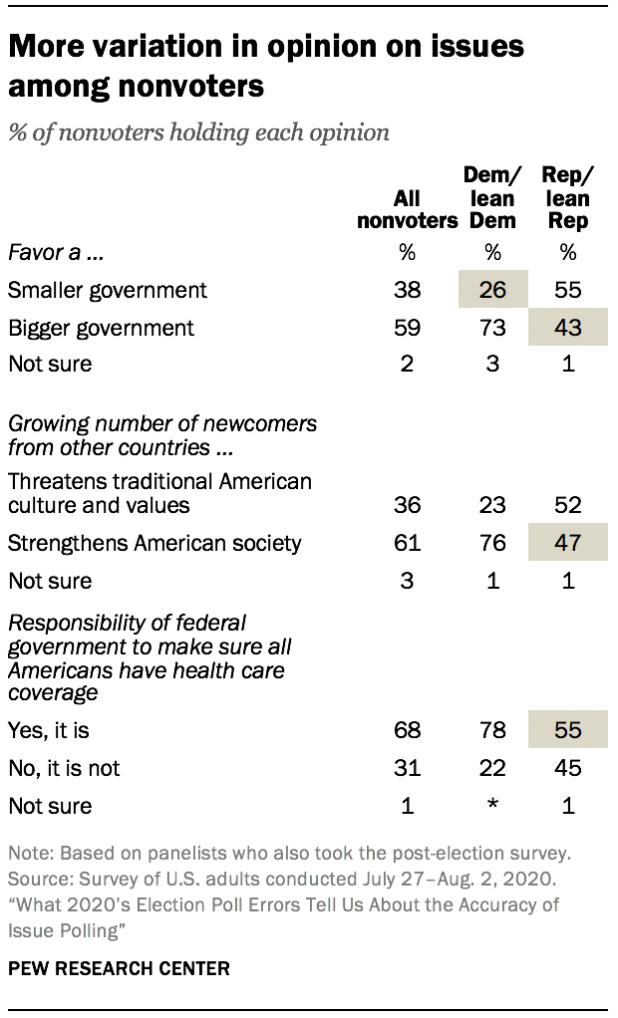

Shifting the focus to party affiliation among nonvoters, we see even less fidelity of partisans to issue positions typically associated with those parties. For example, nearly half of Republicans and independents who lean Republican but did not vote (47%) said that the growing number of immigrants from other countries strengthens American society. And 43% of them favor a larger government providing more services. A 55% majority of Republican nonvoters in this survey believe that it is the responsibility of the federal government to make sure that all Americans have health insurance coverage. This is still considerably smaller than the share of Democratic nonvoters who think the government is responsible for ensuring coverage (78%), but it is far more than we see among Republican voters.

These "defectors" from the party line, in both directions and among both voters and nonvoters, weaken the ability of changes in the partisan or voting composition of the sample to affect the opinion questions. Adding more Trump voters and Republicans does add more skeptics about immigration, but nearly a third of the additional Trump voters say immigrants strengthen American society, a view shared by about half of Republican nonvoters. This means that our survey question on immigration does not change in lockstep with changes in how many Trump supporters or Republicans are included in the poll. Similarly, the Biden voter group includes plenty of skeptics about a larger government. Pump up his support and you get more supporters of bigger government, but, on balance, not as many as you might expect.
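The dampening effect can be made concrete by treating the overall result on a question as a weighted average of each group's answers. The Python sketch below covers voters only and mixes one figure from the text (31% of Trump supporters take the pro-immigrant view) with placeholder values that are purely hypothetical: the 85% figure for Biden voters, the 60% figure for other voters, and the vote shares, which were chosen only to reproduce 12-point and 4.4-point margins.

```python
def overall_share(group_shares, opinion_rates):
    """Overall share holding an opinion: the average of each group's rate,
    weighted by that group's share of the sample."""
    return sum(group_shares[g] * opinion_rates[g] for g in group_shares)


# Share of each group saying immigrants strengthen American society.
# 0.31 for Trump voters is from the text; 0.85 and 0.60 are hypothetical.
rates = {"biden": 0.85, "trump": 0.31, "other": 0.60}

# Hypothetical vote shares giving 12-point and 4.4-point Biden margins.
tilted = {"biden": 0.550, "trump": 0.430, "other": 0.020}
balanced = {"biden": 0.512, "trump": 0.468, "other": 0.020}

margin_shift = 100 * ((tilted["biden"] - tilted["trump"])
                      - (balanced["biden"] - balanced["trump"]))
opinion_shift = 100 * (overall_share(tilted, rates)
                       - overall_share(balanced, rates))

print(f"margin shift:  {margin_shift:.1f} points")   # 7.6
print(f"opinion shift: {opinion_shift:.1f} points")  # 2.1
```

Under these assumptions the opinion estimate moves by the share of voters flipped (3.8 points) multiplied by the gap between the two groups' rates (54 points), or about 2 points. It would match the 7.6-point margin shift only if one side were unanimous and the other unanimously opposed, and even then it would be half of it.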

We want different things from opinion polls and election polls

Not all applications of polling serve the same purpose. We expect and need more precision from election polls because the circumstances demand it. In a closely divided electorate, a few percentage points matter a great deal. In a poll that gauges opinions on an issue, an error of a few percentage points typically will not matter for the conclusions we draw from the survey.

Those who follow election polls are rightly concerned about whether those polls are still able to produce estimates precise enough to describe the balance of support for the candidates. Election polls in highly competitive elections must provide a level of accuracy that is difficult to achieve in a world of very low response rates. Only a small share of the survey sample must change to produce what we perceive as a dramatic shift in the vote margin and potentially an incorrect forecast. As was shown in the graphical simulation earlier, an error of 4 percentage points in a candidate's support can mean the difference between winning and losing a close election. In the context of the 2020 presidential election, a change of that small size could have shifted the outcome from a spot-on Biden lead of 4.4 points to a very inaccurate Biden lead of 12 points.

Differences of a magnitude that could make an election forecast inaccurate are less consequential when looking at issue polling. A flip in the voter preferences of 3% or 4% of the sample can change which candidate is predicted to win an election, but it isn't enough to dramatically change judgments about opinion on most issue questions. Unlike the measurement of an intended vote choice in a close election, the measurement of opinions is more subjective and likely to be affected by how questions are framed and interpreted. Moreover, a full understanding of public opinion about a political issue rarely depends on a single question like the vote choice. Often, multiple questions probe different aspects of an issue, including its importance to the public.

Astute consumers of polls on issues usually understand this greater complexity and subjectivity and factor it into their expectations for what an issue poll can tell them. The goal in issue polling is often not to get a precise percentage of the public that chooses a position but rather to obtain a sense of where public opinion stands. For example, differences of 3 or 4 percentage points in the share of the public saying they would prefer a larger government providing more services matter less than whether that is a viewpoint endorsed by a large majority of the public or by a small minority, whether it is something that is increasing or decreasing over time, or whether it divides older and younger Americans.

How do we know that issue polling – even by the different or more lenient standards we might apply to it – is accurate?

The reality is that we don't know for sure how accurate issue polling is. But good pollsters take many steps to improve the accuracy of their polls. Good survey samples are usually weighted to accurately reflect the demographic composition of the U.S. public. The samples are adjusted to match parameters measured in high-quality, high response rate government surveys that can be used as benchmarks. Many opinions on issues are associated with demographic variables such as race, education, gender and age, just as they are with partisanship. At Pew Research Center, we also adjust our surveys to match the population on several other characteristics, including region, religious affiliation, frequency of internet usage, and participation in volunteer activities. And although the analysis presented here explicitly manipulated party affiliation among nonvoters as part of the experiment, our regular approach to weighting also includes a target for party affiliation that helps minimize the possibility that sample-to-sample fluctuations in who participates could introduce errors. Collectively, the methods used to align survey samples with the demographic, social and political profile of the public help ensure that opinions correlated with those characteristics are more accurate.

As a result of these efforts, several studies have shown that properly conducted public opinion polls produce estimates very similar to benchmarks obtained from federal surveys or administrative records. While not providing direct evidence of the accuracy of measures of opinion on issues, they suggest that polls can accurately capture a range of phenomena including lifestyle and health behaviors that may be related to public opinion.

But it's also possible that the topics of some opinion questions in polls – even if not partisan in nature – may be related to the reasons some people choose not to participate in surveys. A lack of trust in other people or in institutions such as governments, universities, churches or science might be an example of a phenomenon that leads both to nonparticipation in surveys and to errors in measures of questions related to trust. Surveys may have a smaller share of distrusting people than is likely true in the population, and so measures of these attitudes and anything correlated with them would be at least somewhat inaccurate. Polling professionals should be mindful of this type of potential error. And we know that measures of political and civic engagement in polls are biased upward. Polls tend to overrepresent people interested and engaged in politics as well as those who take part in volunteering and other helping behaviors. Pew Research Center weights its samples to address both of these biases, but there is no guarantee that weighting completely solves the problem.

Does any of this suggest that under-counting Republican voters in polling is acceptable?

No. This analysis finds that polls about public opinion on issues can be useful and valid, even if the poll overstates or understates a presidential candidate's level of support by margins seen in the 2020 election. But this does not mean that pollsters should quit striving to have their surveys accurately represent Republican, Democratic and other viewpoints. Errors in the partisan composition of polls can go in both directions. As recently as 2012, election polls slightly underestimated Barack Obama's support.

Despite cautions from those inside and outside the profession, polling will continue to be judged, fairly or not, on the performance of preelection polls. A continuation of the recent underestimation of GOP electoral support would certainly do further damage to the field's reputation. More fundamentally, the goal of the public opinion research community is to represent the public's views, and anything within the profession's control that threatens that goal should be remedied, even if the consequences for estimates on topics other than election outcomes are small. Pew Research Center is exploring ways to ensure we reach the correct share of Republicans and that they are comfortable taking our surveys. We are also trying to continuously evaluate whether Republicans and Trump voters – or indeed, Democrats and Biden voters – in our samples are fully representative of those in the population.

Limitations of this analysis

One strength of this analysis is that the election is over, and it's not necessary to guess at what Trump support ought to have been in these surveys. And by using respondents' self-reported vote choice measured after the election, we avoid complications from respondents who may have changed their minds between taking the survey and casting their ballot.

However, this study is not without its limitations. It's based on polls conducted by only one organization, Pew Research Center, and these polls are national in scope, unlike many election polls that focused on individual states. The underlying mechanism that weakens the association between levels of candidate support (or party affiliation) and opinions on issues should apply to polls conducted by any organization at any level of geography, but we examined it using only our surveys.

Another important assumption is that the Trump voters and Biden voters who agreed to be interviewed are representative of Trump voters and Biden voters nationwide with respect to their opinions on issues. We cannot know that for sure.

Source: https://www.pewresearch.org/methods/2021/03/02/what-2020s-election-poll-errors-tell-us-about-the-accuracy-of-issue-polling/
